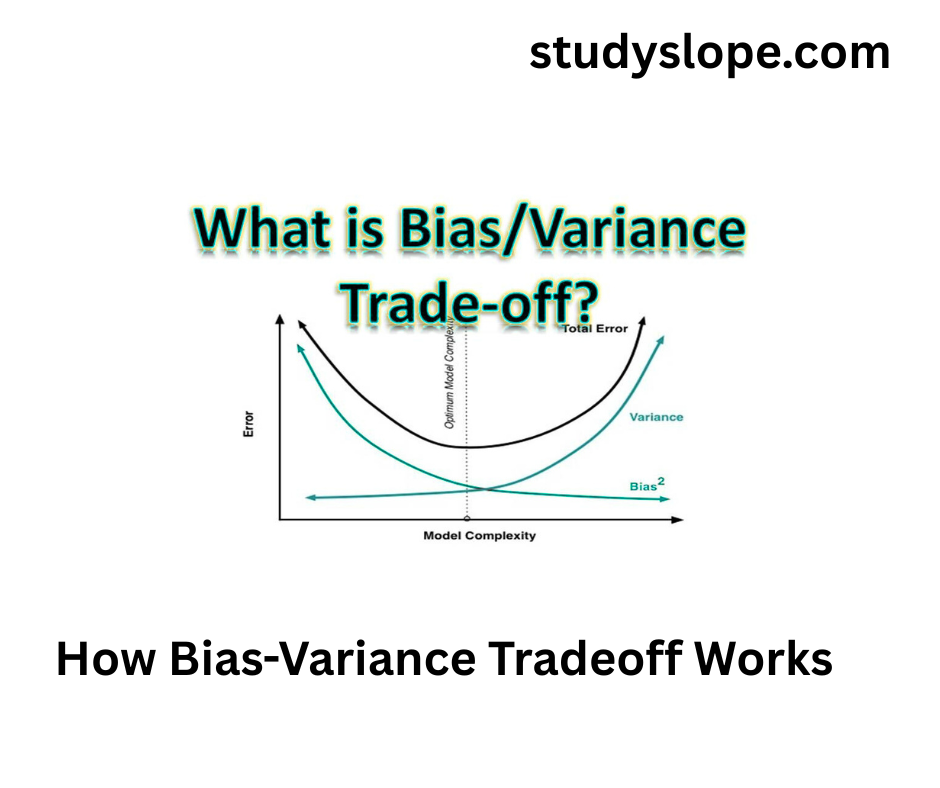

Bias-variance tradeoff

What is the Bias-Variance Tradeoff? The bias-variance tradeoff is a key machine-learning concept that describes how two sources of prediction error, bias and variance, pull against each other. Bias is the difference between a model’s average prediction (taken over many training samples) and the true value of the target variable. Variance measures how much a model’s predictions change from one training sample to another. High-bias models make systematic errors and tend to underfit, while high-variance models are sensitive to the particular sample they were trained on and tend to overfit. Models with low bias tend to have high variance …
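To make the two terms concrete, here is a minimal simulation sketch. It is an illustration under assumptions of its own, not something taken from the post: the true function (`sin`), the noise level, the fixed training grid, and the helper name `bias_variance` are all made up for the example. It refits a polynomial on many noisy samples and estimates squared bias and variance from the spread of the fitted predictions.

```python
import numpy as np

def bias_variance(degree, n_trials=200, n_train=30, noise=0.3, seed=0):
    """Estimate squared bias and variance of a polynomial fit of a given degree.

    Assumed setup (hypothetical, for illustration only):
    the true function is sin(x) on [0, pi], observed with Gaussian noise.
    """
    rng = np.random.default_rng(seed)
    x_train = np.linspace(0.0, np.pi, n_train)   # fixed training inputs
    x_test = np.linspace(0.0, np.pi, 50)         # points where we evaluate
    f_test = np.sin(x_test)                      # true values at test points

    preds = np.empty((n_trials, x_test.size))
    for t in range(n_trials):
        # Each trial is a fresh data sample: same inputs, new noise.
        y_train = np.sin(x_train) + rng.normal(0.0, noise, n_train)
        coefs = np.polyfit(x_train, y_train, degree)
        preds[t] = np.polyval(coefs, x_test)

    mean_pred = preds.mean(axis=0)
    # Squared bias: gap between the average prediction and the truth.
    bias_sq = float(np.mean((mean_pred - f_test) ** 2))
    # Variance: spread of predictions across samples, averaged over test points.
    variance = float(np.mean(preds.var(axis=0)))
    return bias_sq, variance

for degree in (0, 1, 3, 9):
    b, v = bias_variance(degree)
    print(f"degree={degree}  bias^2={b:.4f}  variance={v:.4f}")
```

In this setup the constant model (degree 0) should show the largest squared bias and the smallest variance, while the degree-9 polynomial should show the reverse. The exact numbers depend on the assumed noise level and sample size.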