
What is dirty data? Provide 3 different types of dirty data and suggest data pre-processing steps for each.

“Dirty data” is data that is wrong, missing, inconsistent, or otherwise flawed in a way that makes it hard to use for analysis or decision-making. It can be caused by a number of things, such as human error, system bugs, or problems integrating data from different sources.

Types of Dirty Data

Three Types of Dirty Data and Steps to Clean Up Data:

Missing Data: The term “missing data” refers to the absence of values, records, or information.

Pre-processing steps: imputation (filling a gap with an estimate such as the mean or median), deletion of incomplete records, and metadata enrichment.
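As a rough illustration of deletion and imputation, here is a minimal sketch using only the Python standard library. The records, the field names, and the helper functions `drop_missing` and `impute_median` are hypothetical, and median imputation is just one of several possible strategies.

```python
from statistics import median

# Hypothetical records; None marks a missing value.
records = [
    {"id": 1, "age": 34, "city": "Leeds"},
    {"id": 2, "age": None, "city": "York"},
    {"id": 3, "age": 41, "city": None},
    {"id": 4, "age": 29, "city": "Leeds"},
]

def drop_missing(rows, required):
    """Deletion: keep only rows where every required field is present."""
    return [r for r in rows if all(r.get(f) is not None for f in required)]

def impute_median(rows, field):
    """Imputation: fill missing numeric values with the median of observed ones."""
    observed = [r[field] for r in rows if r.get(field) is not None]
    fill = median(observed)
    # Build new dicts so the original rows are left untouched.
    return [{**r, field: fill if r.get(field) is None else r[field]} for r in rows]

complete = drop_missing(records, ["age", "city"])
imputed = impute_median(records, "age")
```

Deletion is simple but shrinks the data set, while imputation keeps every row at the cost of introducing estimated values, so the right choice depends on how much data is missing and why.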

Inconsistent Data: Data is inconsistent when the same kind of information is recorded with a different structure, format, or coding scheme.

Pre-processing steps: standardization, schema alignment, and code mapping.
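Standardization and code mapping can be sketched as follows, assuming dates arrive in a small set of known formats and countries are spelled in a few known ways. The format list, the `COUNTRY_CODES` table, and both function names are illustrative assumptions, not a fixed recipe.

```python
from datetime import datetime

# Assumed input formats; day-first is assumed for slash- and dot-separated dates.
DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y", "%d.%m.%Y")

# Hypothetical mapping from free-text spellings to ISO 3166-1 alpha-2 codes.
COUNTRY_CODES = {
    "usa": "US",
    "united states": "US",
    "uk": "GB",
    "united kingdom": "GB",
}

def standardize_date(text):
    """Standardization: return the date as ISO 8601 (YYYY-MM-DD), or None if unparseable."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text.strip(), fmt).date().isoformat()
        except ValueError:
            continue
    return None

def map_country(text):
    """Code mapping: return the canonical code, or None for unknown spellings."""
    return COUNTRY_CODES.get(text.strip().lower())
```

Returning `None` for values that cannot be standardized keeps them visible for manual review instead of silently guessing.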

Duplicate Data: The same entity is recorded more than once. Duplicates can be exact, fuzzy (near matches), or record-level.

Pre-processing steps: removing exact duplicates, fuzzy matching, and record linkage.
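The first two of these steps can be sketched with the standard library's `difflib`. The sample names, the `normalize` helper, and the 0.85 similarity threshold are assumptions chosen for illustration; record linkage across separate data sets is not covered here.

```python
from difflib import SequenceMatcher

def normalize(name):
    """Lowercase and collapse whitespace so trivial variants compare equal."""
    return " ".join(name.lower().split())

def drop_exact_duplicates(names):
    """Keep the first occurrence of each normalized name."""
    seen, unique = set(), []
    for n in names:
        key = normalize(n)
        if key not in seen:
            seen.add(key)
            unique.append(n)
    return unique

def fuzzy_pairs(names, threshold=0.85):
    """Return pairs whose similarity ratio meets the (assumed) threshold."""
    pairs = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            score = SequenceMatcher(None, normalize(a), normalize(b)).ratio()
            if score >= threshold:
                pairs.append((a, b))
    return pairs

names = ["Acme Corp", "acme  corp", "Acme Corp.", "Globex Inc"]
unique = drop_exact_duplicates(names)
candidates = fuzzy_pairs(unique)
```

Fuzzy matches are best treated as candidates for review rather than merged automatically, since a threshold that catches "Acme Corp." can also pair two genuinely different records.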


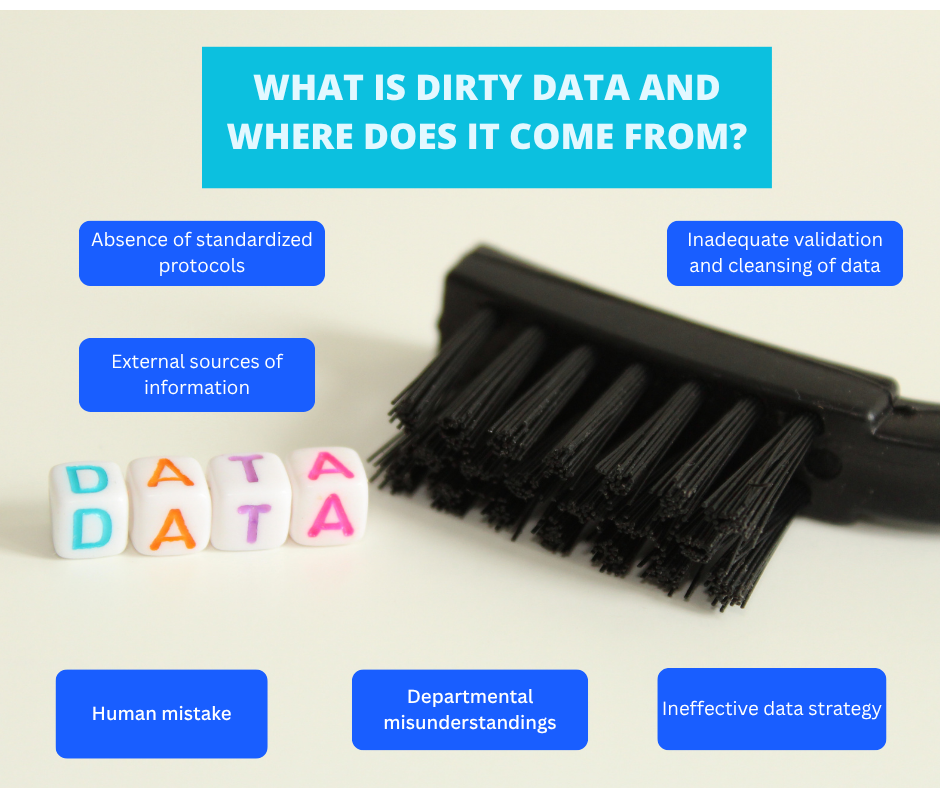

What Is Dirty Data and Where Does It Come from?

Dirty data can show up in many ways, and understanding it starts with knowing where it comes from. Some common causes of inaccurate or inconsistent data are as follows:

Human error: Data entry is tedious and repetitive, so mistakes are inevitable. Possible causes include ambiguous instructions, typos, or simply omitting some entries. These errors are usually small, but they can build up over time and cause major discrepancies.

Departmental miscommunication: Poor or nonexistent communication between departments is another common cause of dirty data, and is reported to account for 35% of it. Departments often work in data silos, each with its own approach to processing, structuring, and storing data, so problems typically arise when information is handed from one department to another.

Ineffective data strategy: Dirty data often stems from a company lacking a sound strategy for managing its data. Human error during data entry, improper data merging, or the use of rudimentary technology for data access, storage, or administration can all lead to dirty data.

Absence of standardized protocols: Without defined protocols, different individuals or organizations may record or present the same data in different ways. These differences can lead to discrepancies and mismatches when data sets are compared or combined.

Inadequate validation and cleansing of data: Databases need continuous reviews and cleaning to maintain data quality. Errors could persist and even worsen without these protections, leading to a decrease in data quality over time.

External sources of information: When importing data from external sources, it is important to assess the quality of the source. Without adequate validation, this external data could corrupt the existing database.
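One way to guard against this is to validate incoming records before they reach the main database and to set aside anything that fails. The sketch below assumes a small set of per-field rules; the field names, the `RULES` table, the deliberately loose email pattern, and the `split_import` helper are all hypothetical.

```python
import re

# Loose, illustrative email pattern; not a full address validator.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

# Hypothetical rules: field name -> predicate that returns True for valid values.
RULES = {
    "customer_id": lambda v: isinstance(v, int) and v > 0,
    "email": lambda v: isinstance(v, str) and EMAIL_RE.match(v) is not None,
    "amount": lambda v: isinstance(v, (int, float)) and v >= 0,
}

def validate(record):
    """Return the names of fields that are missing or fail their rule."""
    return [f for f, ok in RULES.items() if f not in record or not ok(record[f])]

def split_import(records):
    """Separate clean records from those that should be quarantined for review."""
    accepted, quarantined = [], []
    for r in records:
        errors = validate(r)
        if errors:
            quarantined.append((r, errors))
        else:
            accepted.append(r)
    return accepted, quarantined

incoming = [
    {"customer_id": 7, "email": "a@example.com", "amount": 19.5},
    {"customer_id": -1, "email": "not-an-email", "amount": 5},
    {"customer_id": 9, "email": "b@example.com"},
]
accepted, quarantined = split_import(incoming)
```

Keeping the failed records together with the list of failing fields makes it easier to report problems back to the external source instead of discarding the data outright.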